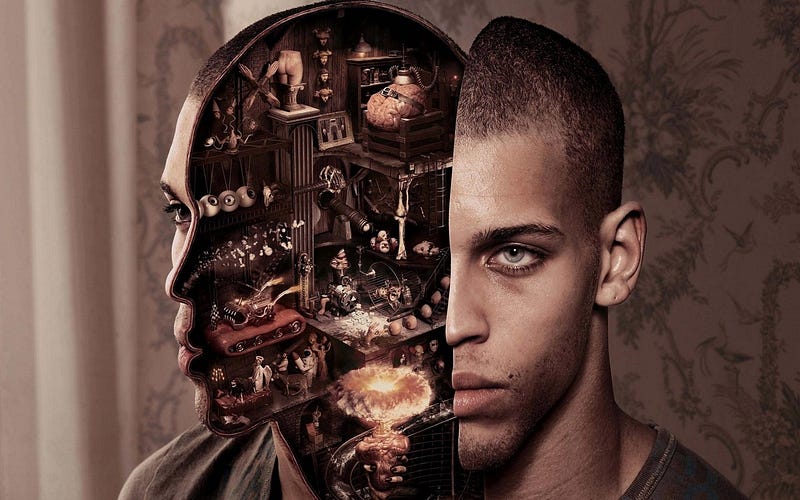

“I got a language in my head that I don’t speak. It’s not just digital, it’s alien. Every day I wake up different, modified.” — Cyborg (Justice League)

In the movie Justice League, Victor Stone is saved by his scientist father. Victor is now powered partly by Ai and partly by his human brain. He keeps self-improving and adapting beyond the point at which he can control it. He develops so fast that he merges with all the world’s available digitised data. He can hack, decode, control. He is a machine that learns, or a “machine learning” human-machine.

Victor is uncomfortable with this new power; he laments the loss of his humanity and of his former self.

Now imagine Victor was not human. Imagine he was an algorithm, with no feeling for humanity in any way. Whoever controls that algorithm could control the world’s data. Controlling the world’s data would mean controlling the world.

“Artificial intelligence is the future, not only for Russia, but for all humankind” — Vladimir Putin

Naturally, those who programme Ai will programme it with their own bias, their own context, their own ethics. The data that feeds that Ai will also be biased, for all our knowledge, and thus our data, is cumulative, built on previously existing knowledge. All that knowledge contains the shape of the world in which it existed: the bias, the zeitgeist, the ethics.

This Thursday Thought is influenced by the great science fiction writer and guest on this week’s Innovation Show, Edward M. Lerner. On the show and in his book “Trope-ing the Light Fantastic”, Edward talks about ethics in AI. When it comes to programming ethics, we need to be aware that ethics evolve.

“B-Ai-S”

Ai, AGI and machine learning centre around machines which self-learn in a compound fashion, but they need to be programmed to begin with. If you think of them as sophisticated algorithms which need “input” to start a snowball effect of learning, then the data inputted will massively influence the data outputted. The initial data becomes extremely important to the constantly evolving end result.

“Bias in — Bias Out”

Ai systems which are heavily reliant on data can easily become biased. This all comes down to where the data initially comes from. If the data Ai learns from is biased in the first place, then of course the output will be biased.
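
To make “bias in, bias out” concrete, here is a minimal Python sketch. It is a toy illustration only, not how any real Ai system is built: the `train` function, the groups “A” and “B” and the decision history are all invented for this example. The “model” simply learns the most common past outcome for each group, so a skewed history produces a skewed rule.

```python
from collections import defaultdict


def train(history):
    """Learn the most common past outcome for each group (a deliberately naive 'model')."""
    counts = defaultdict(lambda: defaultdict(int))
    for group, outcome in history:
        counts[group][outcome] += 1
    # For each group, keep whichever outcome appeared most often in the data.
    return {group: max(outcomes, key=outcomes.get) for group, outcomes in counts.items()}


# Hypothetical, made-up historical decisions that are skewed against group "B".
biased_history = (
    [("A", "hire")] * 8 + [("A", "reject")] * 2
    + [("B", "hire")] * 2 + [("B", "reject")] * 8
)

model = train(biased_history)
print(model)  # {'A': 'hire', 'B': 'reject'}
```

Nothing in the code mentions prejudice, yet the learned rule reproduces the skew in its training data.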

This is referred to as “Bias-In, Bias-Out” by Professor Barry O’Sullivan, Director of the Insight Centre for Data Analytics at University College Cork, Deputy President of the European Association for Artificial Intelligence and a previous guest on the Innovation Show.

Crash and Burn Bias — Microsoft’s “Tay”

“The more you chat with Tay the smarter (more biased) she gets.” — Microsoft

Many companies are and have been experimenting with conversational interfaces. Amazon has commissioned students to build computer programs called socialbots that can hold spoken conversations with humans.

Microsoft launched such a project with an Ai Twitter chatbot called Tay. According to Microsoft, it was to “experiment with and conduct research on conversational understanding”. It was targeted at 18–24 year olds on Twitter, so it was “interacting” with them.

Tay was “exposed” to a barrage of abusive language and political and pro-Hitler tweets. Eventually Tay tweeted “Hitler was right” and “9/11 was an inside job”. Tay had learned from such interactions.

Yes, Tay was hijacked by the community and yes, it was “fed” drivel by social media trolls, but things became so bad that the Tay project was shut down within 24 hours.

This type of bias is called “Interaction Bias” and it can be malicious, but there is so much historical bias in the world due to the evolution of (hu)mankind that it is hard to stamp bias out and get to a ground truth.
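
Interaction bias can be sketched in a few lines of Python. This is not how Tay worked internally (the source does not describe that); `EchoBot` and the phrases below are hypothetical, invented purely to show how a system that learns from whoever talks to it can be steered by a loud minority.

```python
from collections import Counter


class EchoBot:
    """Toy bot: replies with whichever phrase it has heard most often."""

    def __init__(self):
        self.heard = Counter()

    def listen(self, phrase):
        # Every interaction is treated as equally trustworthy training data.
        self.heard[phrase] += 1

    def speak(self):
        if not self.heard:
            return ""
        return self.heard.most_common(1)[0][0]


bot = EchoBot()
for phrase in ["hello there", "nice weather", "hello there"]:
    bot.listen(phrase)
before = bot.speak()

# A coordinated group floods the bot with the same message.
for _ in range(50):
    bot.listen("<abusive slogan>")
after = bot.speak()

print(before)  # hello there
print(after)   # <abusive slogan>
```

The bot has no notion of good or bad input; volume alone decides what it says next.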

The world is male-biased, as we discussed in a previous post, but what happens when we programme Ai for ethics?

The (Other) Turing Test

Alan Turing is widely considered to be the father of theoretical computer science and artificial intelligence. Turing is most recognised for his use of computers to crack Nazi codes during World War 2. Turing played a pivotal role in cracking intercepted coded messages that enabled the Allies to defeat the Nazis in many crucial engagements and in so doing helped win the war.

Surely, we celebrated this great mind and decorated him?

Alan Turing was prosecuted in 1952 (just over half a century ago, 6.6 decades, 66 years) for homosexual acts. Under the Labouchere Amendment, “gross indecency” was a criminal offence in the UK. He accepted chemical castration treatment as an alternative to prison. Turing died two years later, 16 days before his 42nd birthday, when this great mind had so much more to offer.

Why do I mention this?

If Ai was programmed with the “ethics” of 1952, Ai would use the “ethics” of that era as a guideline. Ai would consume the data of its time to learn and adapt and, like Victor Stone (Cyborg), would wake up every day different, modified, improved, just like Tay did.

Just as we may look back over the last century and condemn ourselves (as a human race) for mass atrocities, genocide, war and chemical castration, how will we one day view the atrocities that are considered acceptable today? Ai is being developed at a rapid pace. How do we define good and bad and teach them to AI when good and bad evolve?

AI is already part of our world. It provides optimisation, data analysis, all manner of efficiencies, improved cybersecurity and a myriad of other opportunities. We are reaching the upper echelons of weak Ai and only embarking on the journey of strong Ai. Many of us cannot see how quickly this is happening: it is happening at an exponential rate. Where it will end, we do not know for sure, but those of us who are interested know the general direction.

“The actual path of a raindrop as it goes down the valley is unpredictable, but the general direction is inevitable” — Dr. Kevin Kelly

As Edward Lerner shares on this episode: It is vital for us to understand that AI is a product of algorithms which are also products of humans who are inevitably biased. That is why we need to understand the technology almost too well for a better future with better justice.

Imagine we programmed autonomous weapons with the “ethics” of 1952? Imagine these weapons self-learned based on the initial information they received?

Imagine we hard-coded ethics without a failsafe, with no way to evolve as humanity evolves, as ethics evolve?

I leave you this week with some great dialogue from Zack Snyder’s “Justice League”…

Bruce Wayne: “… that’s what science is for. To do what’s never been done. To make life better.”

Wonder Woman: “Or to end it. Technology is like any other power. Without reason, without heart, it destroys us.”

Ep 117: The Science behind Science Fiction: Augmented Humanity, AI, Super intelligence with Edward M. Lerner

This episode is with one of the leading global writers of hard science fiction and indeed cyber fiction, Edward M. Lerner. He is the author of over 18 titles, and what we hope is fascinating for followers of this show is the science he puts behind the fiction. As opposed to fantasy writing, science fiction is based on possible realities, and that fact is often lost on many of us.

A physicist and computer scientist, he toiled in the vineyards of high tech for thirty years, as everything from engineer to senior vice president. Once suitably intoxicated, he began writing full time.

The focus of this show is themes from his book: ‘Trope-ing the Light Fantastic: The Science Behind the Fiction’.

We discuss:

- Augmented Humanity

- Cyborgs

- Robots

- Genetic Therapy

- Brain Machine Interfaces

- Autonomous Weapons

- AI, Artificial Intelligence

- Superintelligence

- Neural Networks

- Dystopia

- The future skills of humanity

- What we do when everything is automated

Have a Listen:

Soundcloud https://lnkd.in/gBbTTuF

Spotify http://spoti.fi/2rXnAF4

iTunes https://apple.co/2gFvFbO

Tunein http://bit.ly/2rRwDad

iHeart http://bit.ly/2E4fhfl

More about Edward here: https://www.edwardmlerner.com/

Tags: Edward M Lerner, Edward Lerner Science Fiction, Augmented Humanity, AI, Super intelligence, Cyborgs, Neural Networks, Artificial Intelligence, Dystopia, Innovation, Innovation Show, Robots v humans, robotics, nanobots