Tabula rasa

Proponents of tabula rasa believe that we are all born as a blank slate, with no pre-installed mental content, and that all knowledge comes from experience, interaction and perception.

If you subscribe to the notion of tabula rasa, the role of a teacher is extremely important. It is a teacher who inputs “content” into our children, and it is a teacher (of any kind) who sets a group of children on a course of habits.

Shadow of the Leader

“Shadow of the Leader” is a phrase used to describe a common phenomenon where people in positions of authority, through their behaviours, beliefs and values, influence the culture and working habits of those around them. This influence can be good or bad.

To get ahead in an organisation, and to fit in, employees take cues from their superiors. The leader is hugely important in setting the tone of any organisation and thus in developing its culture.

Likewise, parents play the most important role in “forming” a child’s personality traits. They act as the guide that lets the child know whether they are behaving well or badly. They instil manners, goodwill and countless other traits.

“B-Ai-S”

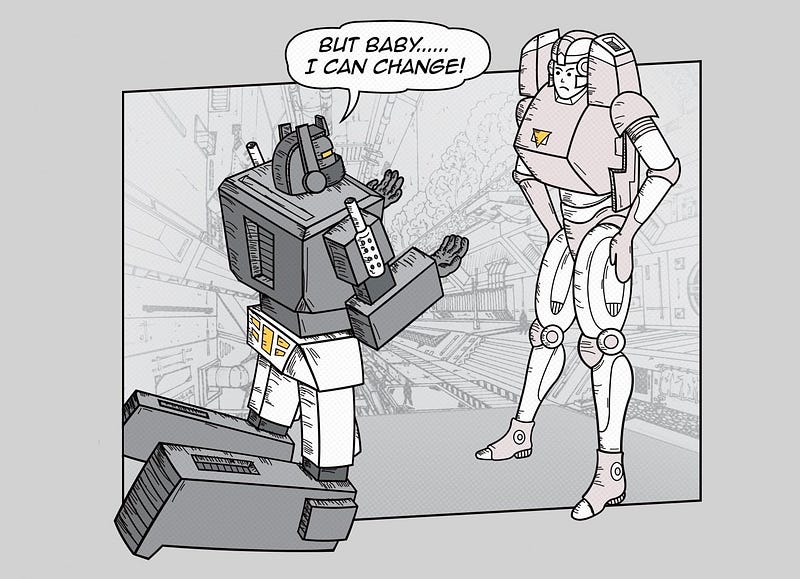

Ai, AGI and machine learning centre on machines that will eventually learn by themselves, but they need to be programmed to begin with. If you think of them as sophisticated algorithms that need “input” to start a snowball effect of learning, then the data that goes in will massively influence the data that comes out.

“Bias in — Bias Out” and Ground Truths

Ai systems that rely heavily on data can easily become biased. It all comes down to where the data comes from: if the data a system learns from is biased in the first place, its output will be biased too.

Professor Barry O’Sullivan, Director of the Insight Centre for Data Analytics at University College Cork and Deputy President of the European Association for Artificial Intelligence, calls this “Bias-In, Bias-Out”. (He is a guest on this week’s Innovation Show.)
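To make “Bias-In, Bias-Out” concrete, here is a minimal Python sketch. It is not taken from the show or from any real system: the toy “model” below learns nothing except the frequencies in its training data, and the data set, the search terms and the function names `train`, `top_label` and `label_share` are all hypothetical, invented for illustration. The skew in the numbers is deliberate.

```python
from collections import Counter, defaultdict

# Hypothetical, deliberately skewed training data: (search term, who appears
# in the image). The numbers are invented for illustration only.
TRAINING_DATA = (
    [("CEO", "man")] * 90 + [("CEO", "woman")] * 10
    + [("housework", "woman")] * 80 + [("housework", "man")] * 20
)


def train(records):
    """Toy 'model': count how often each label appears for each term."""
    model = defaultdict(Counter)
    for term, label in records:
        model[term][label] += 1
    return model


def top_label(model, term):
    """Return the label the model has seen most often for this term."""
    return model[term].most_common(1)[0][0]


def label_share(model, term, label):
    """Fraction of training examples for `term` that carry `label`."""
    counts = model[term]
    return counts[label] / sum(counts.values())


model = train(TRAINING_DATA)
for term in ("CEO", "housework"):
    best = top_label(model, term)
    print(term, best, label_share(model, term, best))
# CEO man 0.9
# housework woman 0.8
```

Nothing in the code is “prejudiced”; it simply hands back the skew it was given. Real systems are vastly more complex, but the principle is the same: the data in shapes the data out.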

A crash course in Bias — Microsoft’s “Tay”

Many companies have been experimenting with conversational interfaces. Amazon, for example, has commissioned students to build computer programs called socialbots that can hold spoken conversations with humans.

Microsoft launched such a project with an Ai Twitter chatbot called Tay. According to Microsoft, its purpose was to “experiment with and conduct research on conversational understanding”.

It targeted 18–24-year-olds on Twitter, and so it was “interacting” with them.

Bias of a feather flock together

Anyone who has worked in a toxic environment has seen how corporate toxicity can spread like wildfire. If that can happen face to face, imagine what can happen when people can hide behind an internet avatar.

We often see such cowardice multiplied on social media and forums where trolls moan, abuse and attack others.

Microsoft’s own pitch was: “The more you chat with Tay the smarter [read: more biased] she gets.”

Tay was “exposed” to a barrage of bad language and of political and pro-Hitler tweets such as “Hitler was right” and “9/11 was an inside job”.

Tay was effectively hijacked by social media trolls who “fed” her drivel, and things became so bad that the Tay project was shut down in less than 24 hours.

This type of bias is called interaction bias, and it can be malicious. But there is also so much historical bias in the world, accumulated over the evolution of (hu)mankind, that bias is hard to stamp out.
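Interaction bias can be sketched in the same hedged way. The toy bot below is hypothetical and is not a description of how Tay actually worked: the `ParrotBot` class and its `learn` and `reply` methods are invented for illustration. It “learns” only by counting the phrases users send it and replies with whatever it has heard most often, which is enough to show how a small but coordinated group can dominate what a learning system says.

```python
from collections import Counter


class ParrotBot:
    """Hypothetical bot that 'learns' by counting the phrases users send it."""

    def __init__(self):
        self.memory = Counter()

    def learn(self, phrase):
        # Every incoming message is treated as equally trustworthy training data.
        self.memory[phrase] += 1

    def reply(self):
        # Echo whatever it has heard most often; with no input, say hello.
        if not self.memory:
            return "hello!"
        return self.memory.most_common(1)[0][0]


bot = ParrotBot()

# 50 ordinary users each send a different friendly message...
for i in range(50):
    bot.learn(f"nice to meet you #{i}")

# ...while 10 trolls all send the same message.
for _ in range(10):
    bot.learn("<offensive slogan>")

print(bot.reply())  # <offensive slogan>
```

The trolls are outnumbered five to one, yet they win, because they repeat the same input while everyone else says something different. The weakness is the design choice of trusting every interaction equally.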

Gender Bias

We cannot deny that boys and girls are, to some extent, socially programmed from birth to behave in certain ways. Bearing in mind what we said about tabula rasa above, think how, from birth, a girl is bought pink clothes and given dolls, playhouses and fairy princess books. She is gently but constantly encouraged to be a “girl”; even the books she reads guide her towards a “womanly” role.

Likewise, boys are guided towards action figures and more “manly” sports.

Ultimately, relatively few women end up in tech roles (thankfully, this is improving).

I cannot vouch for this personally, but I would wager that many women in tech have to work that bit harder to break through the glass ceiling.

Now think about the result. The people “teaching” Ai are mostly male, and the world’s historical data is arguably male-biased, so Ai may begin as a tabula rasa but will soon learn to be white-male-biased.

Some brief examples:

1. Type the keywords “Artificial Intelligence” or “Machine Learning” into LinkedIn and filter by people: the results are mostly male.

2. Type the search term “CEO” into Google and search by images (even in a browser like Opera in private mode). Mostly male?

3. Try “Housework”. Mostly female?

4. Try “Cute babies”. Mostly white? (This last one was suggested by this week’s guest, Prof. O’Sullivan.)

Crash Test Dummies

Here is a great non-tech example.

Crash test dummies were traditionally based on the male body, because men usually drove the car. Recently, crash test dummies have evolved in line with changes in the human body; dummies now reflect the surge in obesity, for example. There are also crash test dummies based on the female body.

One devastating result of basing dummies on male anatomy is that women have been more likely to be killed or injured in car accidents.

This all comes down to the fact that men designed the dummies, men designed the cars, and men mostly drove the cars.

There is a similar pattern in Ai development.

Practice doesn’t make Perfect, Perfect Practice makes Perfect

This is what we were taught as professional rugby players. If you subscribe to this notion, the quality of the coach who trains you becomes hugely important.

If you were properly coached in how to sprint at a young age, chances are you became a far better technical runner than someone who was not well coached. The same goes for swimming, weightlifting or any other sport.

In summary, Ai will increasingly run many important programmes in this world of constant disruption. We should therefore be aware of how these Ai systems are programmed and who is programming them. Once there is poison in the well, we may well all suffer.

On this week’s Innovation Show

Artificial Intelligence Bias, Universal Basic Income, Star Trek Medical Devices and the Democratisation of Health Diagnosis.

We talk to Professor Barry O’Sullivan, Director of the Insight Centre for Data Analytics at UCC. He is also Deputy President of the European Association for Artificial Intelligence (EurAI) and the current SFI Researcher of the Year.

Barry discusses the impact of Ai, and we talk about the inevitable bias in data-based Ai and why it is so important to “teach” Ai responsibly.

We discuss Universal Basic Income (UBI), job automation and the societal challenges ahead.

“An inevitable revolution in healthcare is coming. In this revolution, the consumers are the drivers and technology is the equalizer.” — Dr. Basil Harris

Dr. Basil Harris is CEO and founder of Basil Leaf Technologies. Basil and his team were inspired by Star Trek to create a tricorder: a portable Ai device that lets you check, all by yourself, whether you have pneumonia, diabetes or a dozen other conditions. It is modelled on the one Dr. McCoy waved around on the Starship Enterprise. With it, people can monitor their own blood pressure, heart rate and other vital signs.

Basil emphasises that the device is meant to free up doctors and health practitioners, not to replace them.

The show is broadcast on RTÉ Radio 1 Extra three times weekly and is available on iTunes, TuneIn and Google Play.